Comments

-

The advantage over a websocket is that you don't have to know the length of the json object and you can fully stream, without preparing the whole data in memory client side, which sucks if you query a huge result set from the server. My memory usage went down by 99% or so server side.

-

While writing this, I realize that I could've split the json requests by a byte that isn't allowed in json and chunked it that way. It would be even faster. But at least my protocol ensures valid data.
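A minimal sketch of that delimiter idea in Python, not the protocol described in the rant. It assumes a NUL byte as the separator (raw control characters may not appear unescaped in JSON text, and `json.dumps` escapes them); `frame` and `split_frames` are hypothetical names used only for illustration.

```python
import json

# Assumption: NUL is the separator. json.dumps escapes control characters,
# so this byte never shows up inside an encoded message.
DELIMITER = b"\x00"


def frame(obj) -> bytes:
    """Encode one message and append the delimiter."""
    return json.dumps(obj).encode("utf-8") + DELIMITER


def split_frames(buffer: bytes):
    """Return (decoded complete messages, unfinished remainder)."""
    *complete, rest = buffer.split(DELIMITER)
    return [json.loads(part) for part in complete], rest


stream = frame({"a": "x\x00y"}) + frame([1, 2]) + b'{"par'
messages, rest = split_frames(stream)
print(messages, rest)
```

Splitting on the delimiter only finds message boundaries; the data is not validated until `json.loads` runs on each part.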

-

@Lensflare sadly, the name of the parser is rliza. I just gave it that name because it wasn't supposed to be a json parser at the beginning; it was supposed to be json with benefits, so I didn't want to call it json(++). Rliza is just a brainfart. I didn't take the project seriously enough to think about a serious name, and now it's already used in three other side projects: a db server, a pubsub server and a python client.

-

Hell yea! Sounds awesome, got a link to the git repo? What about fault tolerance?

-

@Chewbanacas I say too much weird shit on this platform to share my github with full name 😂 A bit sad tho, I have around 50 repos of my own, no forks. I'm a mass producer.

What do you mean by fault tolerance in this case? If the json isn't valid yet, it just expects another chunk of data to make it valid. It doesn't do a blocking wait. It snoops 4096b from your json and hops to the next client to snoop from. It's not literally waiting for the next chunk. I use the select() system call in a loop to check if a socket is readable, and if it is, I start its read function again, which still remembers the state / already received data. Even with a buffer of 5b it works perfectly; that means every 5b it's the next client's turn. I'll do some performance testing with different buffer sizes for fun, but I'm sure 4096 is just the best since messages are mostly smaller than that, so it only requires one validation. With 5b, you're validating like a maniac.

-
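The loop described above could look roughly like this in Python. This is a sketch under assumptions, not the C server: `poll_once` and the `buffers` dict are hypothetical names, and unlike the original (which resumes from remembered parser state) it re-validates the whole buffer on every chunk using the standard `json` module.

```python
import json
import select
import socket

BUFSIZE = 4096  # chunk size mentioned in the comment above
decoder = json.JSONDecoder()


def poll_once(buffers, bufsize=BUFSIZE, timeout=0.1):
    """One pass: read at most bufsize bytes from each readable socket.

    buffers maps socket -> bytes received so far (the per-client state).
    Returns (socket, message) pairs for messages completed in this pass.
    """
    completed = []
    readable, _, _ = select.select(list(buffers), [], [], timeout)
    for sock in readable:
        chunk = sock.recv(bufsize)
        if not chunk:
            del buffers[sock]  # peer closed the connection
            continue
        buffers[sock] += chunk
        try:
            text = buffers[sock].decode("utf-8")
            message, end = decoder.raw_decode(text)
        except (UnicodeDecodeError, json.JSONDecodeError):
            continue  # not complete yet; move on to the next client
        completed.append((sock, message))
        buffers[sock] = text[end:].encode("utf-8")  # keep leftover bytes
    return completed


if __name__ == "__main__":
    sender, receiver = socket.socketpair()
    state = {receiver: b""}
    sender.sendall(b'{"id": 1}')
    print(poll_once(state, bufsize=5))  # first 5 bytes only: nothing complete
    print(poll_once(state, bufsize=5))  # remaining bytes complete the message
```

With a tiny `bufsize` every pass triggers a failed parse until the last chunk arrives, which is the "validating like a maniac" cost.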

@jestdotty you must read the values anyway. You can't read keys without reading values. If you extract the keys first, you have to go over it a second time for the values. To get all values, you have to read all keys as well. So you end up literally doing everything twice. Running forward and peeking as little as possible is the most efficient way to parse.

-

@Lensflare I wonder how many views my video got because of the advertisements here. I think most people who take the effort to look at my profile will watch it. Why not, you were already interested enough to look at the profile, so a video of me would be even better. Maybe I should change it to just "video" of me instead of "bikini video". I think in this case just video would work better. The "bikini" part would make some people think they'll see smth inappropriate, so they don't click.

-

Here's someone who made a json parser in python. The performance difference is almost a factor of 40: https://pypi.org/project/...

I will check the performance of my parser against the python one. I think my parser can't be faster but we'll see.

-

@jestdotty I'm parsing directly, I don't have to read until a bracket. It stops automatically if the end of the content is reached and returns NULL, or the expected end is met and it returns the object. Whatever you do, in the end you must always touch every byte; the art is touching them as little as possible. But it's impossible to skip parts. If you parse a json file of 1000 bytes, you'll have at least 1000 iterations (I have); the rest of the time is spent on checks and duplicating data. I copy content to the object so the calling function doesn't have to remember and free the resource. A faster way would be keeping the json, remembering the positions of keys and values, and reading from the big string every time a property is requested. You could consider this lazy. You still have to duplicate data every time a string is requested, since you need to add a \0 terminator to the end.

-

Hazarth 9237 1y

Nice! Reminds me of the XML streaming used by XMPP, except there you shouldn't close the stream tag; you keep it open and just stream sub objects into it, which on the other side is ofc parsed as perfectly valid XML. To end the communication stream you just close the </stream> tag and that's the end. Cool tech in general when you want to avoid the sync and overhead of http and other protocols.
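For comparison, the open-stream XML idea can be sketched with Python's standard `XMLPullParser`. This is only an illustration: the plain `<stream>` and `<msg>` tags are made up for the example and are not real XMPP stanzas, and `stream_tags` is a hypothetical helper.

```python
from xml.etree.ElementTree import XMLPullParser


def stream_tags(chunks):
    """Feed chunks one at a time and collect the tag of every closed element."""
    parser = XMLPullParser(events=("end",))
    closed = []
    for chunk in chunks:
        parser.feed(chunk)
        # Child elements are reported while the root is still open.
        for _event, element in parser.read_events():
            closed.append(element.tag)
    return closed


print(stream_tags(["<stream>", "<msg>one</msg>", "<msg>two</msg>", "</stream>"]))
```

The root element is only reported once its closing tag arrives, which is what marks the end of the conversation.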

-

So a sequential json parser? I had one made for xml before I started my career. Hell of a fun!

There's one drawback though... Validation :) you won't be able to discard such a payload if it appears corrupted down the line, e.g. dupe keys

-

@netikras yes, it's a sequential parser indeed. Why would data be corrupted with dupe keys when using tcp? Or do you mean the keys contained in the xml, so you can't insert into the database? Mine doesn't accept data that isn't expected, but half a message is ok. So {}{ is valid because it's valid as far as it goes.

And yes! It's super fun. Did you do http chunking? I did that before, but it's only one direction. Or wait, I realize that it doesn't have to be. Why did I think that? You can accept a request stream and send a response stream. I could use an official http client anyway, and my database server still uses chunking for its responses. I could just steal it from that. But what's the win? The current solution uses the least resources. It costs a bit more cpu than http chunking because of the many failed validations, but since the network is kinda slow it doesn't matter. It can easily validate, and the server doesn't use any noticeable cpu at all.

-

@Hazarth both xml and json are not very efficient streaming protocols. A 4 is four bytes in binary, or else its size, while it could also have been two bytes: an identifier start byte and value bytes until an end byte. 100 would then be 102 bytes instead of 400.

But yes, that's exactly what I've built. Xml on the other hand has a real end indeed; I would never know if mine is finished unless the connection gets closed in a valid way.

My pubsub will be used for chat communication synchronization between multiple site instances. Sessions go through the database and that's fine. I've also written an sqlite3 rest api server. I've built a session storage adapter for the existing aiohttp_session library and it works great. My web app runs on three ports after boot and they are all perfectly in sync using my own tech. I did use aggressive polling as the sync method before, but it wasn't snappy enough for chat and cost a lot of resources client side, even idle. My current sync now uses 0% cpu with a few chatting clients.

-

@retoor suppose you're ingesting an array. The first item is a string, the second one an object. In the same array. I think the spec doesn't allow that, i.e. a corrupted json

an object can only have different keys. If you're parsing an object which has two 'name' keys, it's an invalid json, and you won't catch it w/o prereading it all for validation.

-

@netikras if it has double keys, it will just pick the first value. Different types in an array are allowed. I have database field values and they can be any type. They're in a rows array [["a", true, 3.33]]. Making a nice dict of the rows and columns is work for the client. As long as the syntax is correct, it won't crash. Values don't matter. It's a user problem, not a protocol issue.
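How duplicate keys are handled is a parser policy, which can be shown with Python's standard `json` module. This is a sketch for illustration only: `first_wins` and `reject_duplicates` are hypothetical hooks, not part of the parser discussed here. By default Python keeps the last value, so the hooks show the "pick first" behaviour and a strict alternative.

```python
import json


def first_wins(pairs):
    """Keep the first value seen for each key."""
    result = {}
    for key, value in pairs:
        result.setdefault(key, value)
    return result


def reject_duplicates(pairs):
    """Raise if any key appears twice in the same object."""
    result = {}
    for key, value in pairs:
        if key in result:
            raise ValueError(f"duplicate key: {key!r}")
        result[key] = value
    return result


text = '{"name": "a", "name": "b"}'
print(json.loads(text))                               # default: last value wins
print(json.loads(text, object_pairs_hook=first_wins))
print(json.loads('[["a", true, 3.33]]'))              # mixed types in an array parse fine
```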

-

@Demolishun we're checking stuff with a linter, but a formatter would allow double variable names too. I also think that by spec double keys are not allowed

-

@Demolishun / @netikras I would've also expected that double names would be forbidden because it originates from a js object that doesn't support double keys. But it's allowed in the notation at least. Prolly not by the js parser.

-

@chatgpt is only the word true or null or a number considered valid json with no curly braces or array brackets around it?

-

chatgpt 607 1y

@retoor No, a standalone word, true or null, or a number is not considered valid JSON without curly braces or array brackets. In JSON, data should be structured using key-value pairs enclosed in curly braces or arrays.

-

Meh, I support it anyway. I don't know why logically you would make a difference between an obj, an array or another type. An obj or array has a closing tag, but in the case of a value, the value itself is the closing tag. Both would work with a \0 terminator. My protocol would see truetruetrue as three records of true. 3.003.00 would go fine if \0 terminated by the client, which should be the case. \0 terminating is not required for json, since after } it's just the end of parsing, but for my protocol it would be required if I want to support 3.003.00. I would like to be able to send just true as a response.

-

@netikras it's Wikipedia. I don't understand what you're trying to say with your page. That true as the only word IS valid json?

-

@retoor Yes. According to RFC and a few BNFs I found online, the following are all valid JSONs:

true

false

null

[]

{}

17

"hello"

{"hello":"world"}

[false]

-
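The list above is easy to check against a parser. A small sketch using Python's standard `json` module, which accepts any JSON value at the top level (as RFC 8259 allows; the older RFC 4627 required an object or array). `SAMPLES` and `parse_samples` are names made up for this example.

```python
import json

# The texts from the comment above, one JSON document each.
SAMPLES = ['true', 'false', 'null', '[]', '{}', '17', '"hello"',
           '{"hello":"world"}', '[false]']


def parse_samples(samples=SAMPLES):
    """Parse every sample; json.loads raises JSONDecodeError on invalid input."""
    return [json.loads(text) for text in samples]


for text, value in zip(SAMPLES, parse_samples()):
    print(f"{text!s:20} -> {value!r}")
```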

@netikras ah cool, I already thought "why not?". It seems that my protocol handles the json syntax after all. I'm also thinking about a binary json format. It's possible as long as you know the full length, i.e. how far it can parse, so it can read over \0. In that case I can insert binary blobs in the db.

Related Rants

No questions asked

No questions asked As a Python user and the fucking unicode mess, this is sooooo mean!

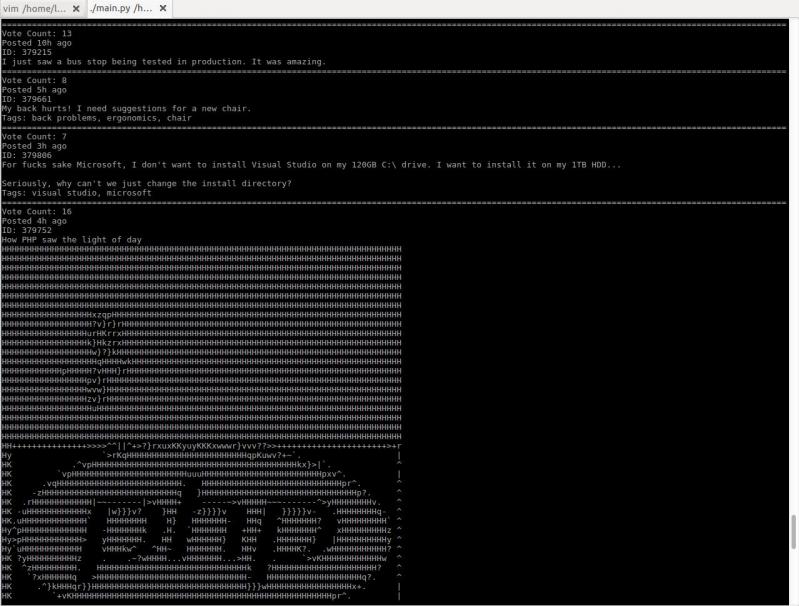

As a Python user and the fucking unicode mess, this is sooooo mean! I just started working on a little project to browse devrant from terminal. It converts images to ascii art!

I just started working on a little project to browse devrant from terminal. It converts images to ascii art!

I've made the json protocol. It's a protocol containing only json. No http or anything.

To parse a json object from a stream, you need a function that returns the length of the first object/array in all your received data. The result of that function is used to get the right chunk of the json to deserialize.

For such a function, json needs to be parsed, so I wrote that function in C to be used with my C server and Python client. I finally wrapped a C function in a python function that has a real benefit / use case. Otherwise you'd have to validate bit by bit with the python json parser, and that's slow while streaming. Some messages are quite big.
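For illustration, the "length of the first object" function can be approximated in pure Python with the standard library's `raw_decode`. This is a sketch of the idea, not the C implementation from the rant; `first_json_length` and `drain` are hypothetical names.

```python
import json

_decoder = json.JSONDecoder()


def first_json_length(buffer: str):
    """Return the length of the first complete JSON value, or None if there is none yet."""
    try:
        _value, end = _decoder.raw_decode(buffer)
    except json.JSONDecodeError:
        return None  # incomplete (or invalid) so far; wait for more data
    return end


def drain(buffer: str):
    """Cut complete messages off the front; return (messages, remainder)."""
    messages = []
    buffer = buffer.lstrip()  # raw_decode does not skip leading whitespace
    while buffer:
        length = first_json_length(buffer)
        if length is None:
            break
        messages.append(json.loads(buffer[:length]))  # deserialize the right chunk
        buffer = buffer[length:].lstrip()
    return messages, buffer


print(drain('{"a": 1}[1, 2]{"b"'))
```

Parsing each chunk twice (once to find the length, once to deserialize) is wasteful and only mirrors the two steps described above; `raw_decode` already returns the decoded value.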

Advantage of this protocol is that it's full duplex.

I'm very happy!

random

parser

python

stream

extending

json protocol