Join devRant

Do all the things like

++ or -- rants, post your own rants, comment on others' rants and build your customized dev avatar

Sign Up

Pipeless API

From the creators of devRant, Pipeless lets you power real-time personalized recommendations and activity feeds using a simple API

Learn More

Related Rants

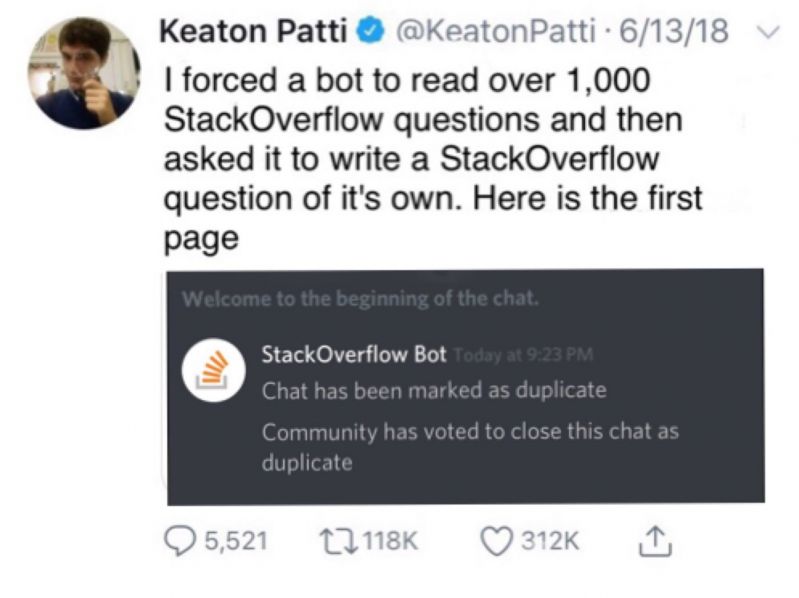

Machine Learning messed up!

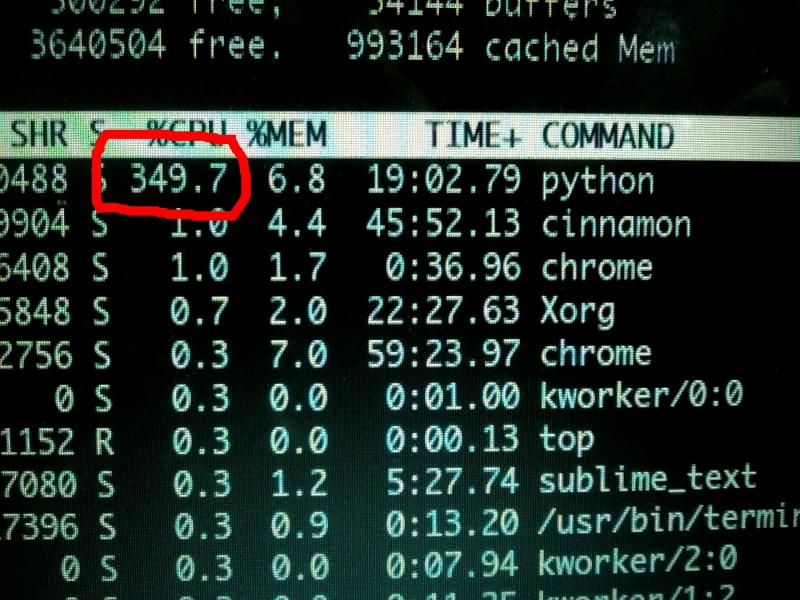

Machine Learning messed up! When your CPU is motivated and gives more than his 100%

When your CPU is motivated and gives more than his 100% What is machine learning?

What is machine learning?

As you can see from the screenshot, it's working.

The system is actually learning the associations between the digit sequence of semiprime hidden variables and known variables.

Training loss and validation loss are super high at the moment, and I'm using an absurdly small training set (10k sequence pairs). I'm running on the assumption that there is a very strong correlation between the structures (and that it isn't just all ephemeral).
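To make "10k sequence pairs" concrete, here is a minimal sketch of how a set like that could be generated. The actual hidden and known variables aren't spelled out in this post, so the sketch assumes a stand-in: the input is the digit sequence of the semiprime product and the target is the digit sequence of its smaller prime factor. The names `is_prime`, `random_prime` and `make_pairs`, and the 4-digit prime range, are all made up for illustration.

```python
import random


def is_prime(n: int) -> bool:
    """Trial-division primality test; fine for the small primes used here."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    i = 3
    while i * i <= n:
        if n % i == 0:
            return False
        i += 2
    return True


def random_prime(lo: int, hi: int, rng: random.Random) -> int:
    """Draw random integers in [lo, hi] until one of them is prime."""
    while True:
        candidate = rng.randint(lo, hi)
        if is_prime(candidate):
            return candidate


def make_pairs(count: int, lo: int = 1_000, hi: int = 9_999, seed: int = 0):
    """Return `count` (input_digits, target_digits) pairs.

    Assumption: the target is the smaller prime factor, a stand-in for
    the hidden variables, which the post does not define.
    """
    rng = random.Random(seed)
    pairs = []
    for _ in range(count):
        p, q = sorted((random_prime(lo, hi, rng), random_prime(lo, hi, rng)))
        product = p * q
        # Two 4-digit primes give a 7- or 8-digit product; zero-pad to width 8.
        inputs = [int(d) for d in str(product).zfill(8)]
        targets = [int(d) for d in str(p)]
        pairs.append((inputs, targets))
    return pairs


pairs = make_pairs(10_000)
```

Fixed-width digit lists keep every example the same shape, which makes them easy to feed to a sequence model later.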

This initial run is just to see if training a machine learning model is a viable approach.

Won't know for a while. Training loss could get very low (that's a good thing, indicating actual learning), only to spike later on, and if it does, I won't know whether the sample size is too small, whether I need to do more training, or whether the problem is actually intractable.
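A spike like that can at least be flagged automatically from the per-epoch loss history. This is a rough sketch, not a diagnosis: it only reports where the loss jumps above a multiple of the best value seen so far, and says nothing about the cause. The function name, `factor` and `warmup` are hypothetical and untuned, and the loss values are invented for the example.

```python
def find_loss_spike(losses, factor=2.0, warmup=5):
    """Return the index of the first epoch whose loss exceeds `factor`
    times the lowest loss seen before it, or None if there is no spike.

    The first `warmup` epochs are never flagged, since early losses
    tend to be noisy.
    """
    best = float("inf")
    for epoch, loss in enumerate(losses):
        if epoch >= warmup and loss > factor * best:
            return epoch
        best = min(best, loss)
    return None


# Invented loss history: steady improvement, then a jump at epoch 7.
history = [2.3, 1.9, 1.4, 0.9, 0.5, 0.3, 0.25, 0.9, 1.1]
spike_at = find_loss_spike(history)
print(spike_at)  # 7
```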

If or when that happens I'll experiment with different configurations like batch sizes, and more epochs, as well as upping the training set incrementally.
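One simple way to organize those experiments is a plain grid of configurations, ordered so the training set grows incrementally. The specific batch sizes, epoch counts and set sizes below are placeholders, not values from the actual runs.

```python
from itertools import product

# Placeholder values; the real candidates would come from experimentation.
batch_sizes = [32, 64, 128]
epoch_counts = [20, 50]
train_sizes = [10_000, 20_000, 40_000]

# train_size varies slowest, so all runs on the small set come first.
configs = [
    {"batch_size": b, "epochs": e, "train_size": n}
    for n, e, b in product(train_sizes, epoch_counts, batch_sizes)
]

for cfg in configs[:3]:
    print(cfg)
```

Each dict maps onto one training run: in Keras, `batch_size` and `epochs` are keyword arguments of `model.fit`, and `train_size` would control how many pairs get sliced off the dataset.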

In either case, once the initial model is trained, I need to test it on samples it has never seen before (products I want to factor) and see if it generates some or all of the digits needed for rapid factorization.

Even partial digits would be a success here.
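Since partial digits count, an exact-match score would undersell the results; a per-position score fits better. A minimal sketch, assuming predictions and ground truth are equal-length digit strings (the helper names and the sample digits are made up):

```python
def digit_matches(predicted: str, actual: str) -> list:
    """Mark each position True where the predicted digit is correct."""
    if len(predicted) != len(actual):
        raise ValueError("digit sequences must have the same length")
    return [p == a for p, a in zip(predicted, actual)]


def digit_accuracy(predicted: str, actual: str) -> float:
    """Fraction of digit positions predicted correctly."""
    matches = digit_matches(predicted, actual)
    return sum(matches) / len(matches)


# Invented example: the first two of four digits are right.
print(digit_matches("7919", "7927"))   # [True, True, False, False]
print(digit_accuracy("7919", "7927"))  # 0.5
```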

I also expect to create multiple training sets for each semiprime product, pairing its unknown internal variables with derivable known variables. The intersections of the sets, and the digits they have in common, might be the best shot available for factorizing very large numbers with this approach.
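One reading of "intersection" here: run several models (one per training set) on the same product and keep only the digit positions where all of them agree. That interpretation is an assumption, and the function name and sample predictions below are hypothetical.

```python
def consensus_digits(predictions: list) -> list:
    """Keep a digit where every prediction agrees; use None elsewhere."""
    if not predictions:
        raise ValueError("need at least one prediction")
    width = len(predictions[0])
    if any(len(p) != width for p in predictions):
        raise ValueError("all predictions must have the same length")
    # zip(*predictions) walks the predictions column by column.
    return [col[0] if len(set(col)) == 1 else None for col in zip(*predictions)]


# Invented outputs from three models for the same product.
print(consensus_digits(["7919", "7929", "7919"]))  # ['7', '9', None, '9']
```

Positions that come back as `None` are the ones that would still need to be searched or brute-forced.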

Regardless, once I see that the model works at the small scale, the next step will be to increase the scope of the training data, and begin building out the distributed training platform so I can cut down the training time on a larger model.

I also want to train on random products of very large primes, just for variety, and see what happens with that. But everything appears to be working. Working way better than I expected.

The model is running and learning to factorize semiprimes from the set of identities I've been exploring for the last three fucking years.

Feels like things are paying off finally.

Will post updates specifically to this rant as they come. Probably once a day.

random

keras

machine learning