
Related Rants

No questions asked

No questions asked As a Python user and the fucking unicode mess, this is sooooo mean!

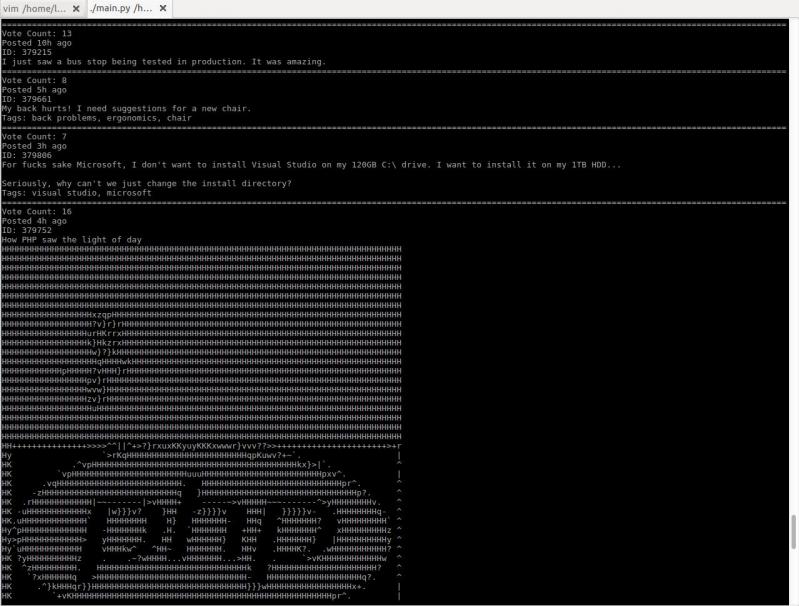

As a Python user and the fucking unicode mess, this is sooooo mean! I just started working on a little project to browse devrant from terminal. It converts images to ascii art!

I just started working on a little project to browse devrant from terminal. It converts images to ascii art!

It was my first time doing an NLP task / implementing an RNN, and I was using the torchtext library to load the IMDB dataset and run sentiment analysis on it. I was able to use collate_fn and batch_sampler to create a DataLoader, but it gets exhausted after a single epoch. I’m not sure whether this is expected behavior. If it is, do I need to initialize a new DataLoader for every epoch? If not, is something wrong with my implementation? Please show me the correct way to implement this.

PS: I was following the official torchtext changelog on GitHub.

Links to the changelog and to my implementation:

changelog - https://github.com/pytorch/text/...

My implementation - https://colab.research.google.com/d...
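One likely cause, sketched under an assumption since the notebook isn't reproduced here: if the `batch_sampler` is a generator (a function containing `yield` that is called once, e.g. `batch_sampler=some_fn()`), the `DataLoader` calls `iter()` on that same object at the start of every epoch. A generator can only be consumed once, so the second epoch yields no batches. Making the sampler a class whose `__iter__` builds fresh batches each time fixes this without recreating the `DataLoader`. The toy data, the whitespace `tokenize` helper, and the names `bucket_generator`, `BucketBatchSampler` and `batches_per_epoch` are hypothetical; only `DataLoader` and `Sampler` come from PyTorch.

```python
import random
from typing import Iterator, List, Sequence, Tuple

import torch
from torch.utils.data import DataLoader, Sampler

# Hypothetical toy data standing in for a list of (label, text) pairs.
DATA: List[Tuple[int, str]] = [(i % 2, "word " * (i % 7 + 1)) for i in range(20)]
BATCH_SIZE = 4


def tokenize(text: str) -> List[str]:
    # Hypothetical whitespace tokenizer, not torchtext's.
    return text.split()


def collate_batch(batch: List[Tuple[int, str]]) -> Tuple[torch.Tensor, torch.Tensor]:
    # Turn a list of (label, text) pairs into label and token-length tensors.
    # A real collate_fn would also numericalize and pad the text.
    labels = torch.tensor([label for label, _ in batch])
    lengths = torch.tensor([len(tokenize(text)) for _, text in batch])
    return labels, lengths


def bucket_generator(data: Sequence[Tuple[int, str]], batch_size: int) -> Iterator[List[int]]:
    # Generator version: each call returns a one-shot iterator over batches
    # of indices sorted by text length.
    indices = sorted(range(len(data)), key=lambda i: len(tokenize(data[i][1])))
    for start in range(0, len(indices), batch_size):
        yield indices[start:start + batch_size]


class BucketBatchSampler(Sampler):
    # Re-iterable version: DataLoader calls __iter__ once per epoch, and
    # each call builds a fresh list of batches.
    def __init__(self, data: Sequence[Tuple[int, str]], batch_size: int) -> None:
        self.data = data
        self.batch_size = batch_size

    def __iter__(self) -> Iterator[List[int]]:
        indices = list(range(len(self.data)))
        random.shuffle(indices)  # order ties between equal lengths differently each epoch
        indices.sort(key=lambda i: len(tokenize(self.data[i][1])))  # stable sort by length
        batches = [indices[s:s + self.batch_size]
                   for s in range(0, len(indices), self.batch_size)]
        random.shuffle(batches)  # vary batch order between epochs
        return iter(batches)

    def __len__(self) -> int:
        return (len(self.data) + self.batch_size - 1) // self.batch_size


def batches_per_epoch(loader: DataLoader, epochs: int) -> List[int]:
    # Count how many batches the loader yields on each full pass.
    return [sum(1 for _ in loader) for _ in range(epochs)]


if __name__ == "__main__":
    one_shot = DataLoader(DATA, batch_sampler=bucket_generator(DATA, BATCH_SIZE),
                          collate_fn=collate_batch)
    reusable = DataLoader(DATA, batch_sampler=BucketBatchSampler(DATA, BATCH_SIZE),
                          collate_fn=collate_batch)
    print(batches_per_epoch(one_shot, 3))  # [5, 0, 0]
    print(batches_per_epoch(reusable, 3))  # [5, 5, 5]
```

Putting the bucketing logic inside `__iter__` means every epoch gets a freshly shuffled set of batches, and `__len__` lets `len(loader)` work. Calling the generator function again and building a new `DataLoader` each epoch would also work, but the class keeps the training loop unchanged.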

Tags: question, deeplearning, python, pytorch, nlp