Comments

-

pmso 406 5y

I miss the times when memory wasn't free.

People make stuff without thinking about how much memory and storage it will take, whether it will run on a 5-year-old PC or phone, etc.

-

"Graphics are the responsibility of the graphics driver"

Not entirely. The low level - yes. High level - no. Image formats, scaling, composition is not part of the graphics driver.

We can debate whether this is the responsibility of the graphics library (GTK, whatever), but it nevertheless happens inside the browser process.

One usually can offload video decoding to the hardware/driver, but not every hardware/driver supports every video format (so one needs software decoding at least as a fallback).

The same goes for sound: PulseAudio - if I skimmed the documentation correctly - wants a decoded audio signal, so the browser process needs decoders for multiple audio formats.

-
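A rough sketch of the "hardware first, software as a fallback" idea from the comment above, for illustration only. Everything named here is hypothetical: `Decoder`, `pick_decoder`, the backend names and the format sets are made up and do not correspond to any real browser or driver API.

```python
# Hypothetical sketch: prefer a hardware decoder, fall back to software.
# Backend names and supported formats are illustrative assumptions.
from typing import NamedTuple


class Decoder(NamedTuple):
    name: str
    hardware: bool
    formats: frozenset


def pick_decoder(fmt, decoders):
    """Return the first decoder that supports fmt, trying hardware ones first."""
    # sorted() is stable, so decoders keep their relative order within each group.
    for dec in sorted(decoders, key=lambda d: not d.hardware):
        if fmt in dec.formats:
            return dec
    raise LookupError(f"no decoder available for {fmt!r}")


DECODERS = [
    Decoder("software", False, frozenset({"h264", "vp9", "av1"})),
    Decoder("gpu", True, frozenset({"h264"})),
]

print(pick_decoder("h264", DECODERS).name)  # gpu
print(pick_decoder("av1", DECODERS).name)   # software
```

The point is only that the software path has to exist somewhere in the process, because the hardware list will never cover every format.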

Condor 31546 5y

@sbiewald I would say that rendering (but not decoding) an image (or video for that matter) would be the engine's job. It should be able to hook into system libraries for that. Most media players do this as well (VLC being one of the few exceptions I know of). What the engine would need to do on its own is look at style properties to decide where to place it in the page, how large it needs to be, etc.

Same goes for audio decoding. You can decode MP3 files with libmp3lame for example. Same goes for pretty much every other format. None of this should be the browser's job. Yes, its process has to make the call, that is true. But it doesn't need to implement any of this itself.

-

Are you sure that it contains a renderer...

I would assume it uses the system rendering engine.

-

Condor 31546 5y

@IntrusionCM That's actually a really good question! I do know that the TLS certificate store must be its own. The internal web browser does not trust any current certs, it's too old. For renderer differences I should perhaps look for inconsistencies in web page renders. It's a J2ME application, do those have much access to the rest of the system? Maybe it's part of the PSPKVM "game" you run to have the JVM to run those? I'm quite unsure about the internals regarding that, but very curious now.

-

Yeah, the size of Opera Mini is impressive, but it doesn't support a lot of features. It is probably on par with IE10-11, so browser support won't be any good nowadays.

-

Giving web application code access to your graphics and audio drivers (or any native code) is an incredibly bad idea for security. Same with access to system libraries. And, incidentally, also same with letting them pressure memory without a GC. There's a reason browsers go to incredible extents to sandbox your system away from all that. The sandbox needs to be well designed otherwise it's useless (look at eg. Java applets). It's really easy to think you can make a better browser but when you get down to it you start agreeing with the way existing vendors do it.

-

Condor 31546 5y

@RememberMe input validation! Yes anything you get from the interwebs (i.e. a website in this case) can be malicious. It should be strictly validated when being rendered. Maybe web browsers should be *more* strict there. Currently they are extremely lax.

As for media, do you really think that the browser vendor whose business is making a *browser* is going to make a media library better than those whose job it is to make a media library? Also, how is a media file downloaded from the internet that you play in the browser different from a media file you download to disk and open in a native media viewer/player for it?

I've been in security for 4 years and I'm baffled.

-

vane 10439 5y

Yeah, but it's not cross-compiled and doesn't include graphics overhead because that's solved by Java, nor wasm, video/audio encoders, DRM, plugin systems and, most importantly, the latest JavaScript/CSS/HTML support with backward compatibility for HTML 1 and JavaScript 1.0.

I think if some browser drops backward compatibility, it will be a lightweight product.

-

Condor 31546 5y

On my previous comment: validation is not the same as confinement. Browsers do go to extreme lengths to confine, and play every trick in the book to do so. But validation is not that. You get a correctly written website and it will render in any browser, strict or not. Yes even IE6 and a lot more than that too. Just because websites are written like shit, doesn't mean that browser vendors should bend themselves like a pretzel to just confine all the rancid shit.

-

qviper 190 5y

I remember running it on a Nokia phone years ago; now I have Opera on my PC and phone too.

-

@Condor you want to...validate code? Turing complete code? From randoms on the internet? Pretty much impossible because 1. you don't have a spec to validate against, and 2. even if you do, it's not always possible to prove what a program does, either because the proof is way too complex for a prover to solve in reasonable time, or because the proof is impossible (halting problem).

So what you do instead is create a well defined programming abstraction and libraries (like HTML5 media and webworkers etc.) so that no matter how nutty the code is you're always inside the abstraction...and oh look we're back at js/wasm.

I don't get your point about media libraries. Browsers export standard graphics interfaces like canvas and WebGL so that the entire web runs on one set of standards *and* is also sandboxed (full OpenGL access to my GPU? No way). Low level media handling *is* done by using platform specific libraries.

"Just because websites are written like shit [...] bend themselves like a pretzel" uh...yes it does mean that browser vendors should bend over backwards to get every possible thing working as well as possible, because if they don't their userbase will simply migrate to one that does. *Nobody* cares about the implementation of a website, they just want to use it. That's the whole point. -

Condor 31546 5y

@RememberMe I am well aware of the NP problem. Some code does not compute. As for validation.. let's see. Compilers like GCC constantly fuck me up on that. Interpreters such as bash, Python, whatever. Programming languages have to be well-defined so that you can write code in it in the first place.

HTML is well-defined, although you can certainly forget to close a tag and the browser will just deal with it. XML is even better and what I would strive for. And yes even JS or ECMA rather. It's also been written in a single afternoon.

Other than that you can run a separate engine process for every tab, duplicate all the memory for that.. and web devs can load several MB of crap just to make an image go blinky blinky.

-

@Condor Not sure about PSP, but on Android many browsers, e.g. Via, use the internal WebKit rendering engine and just provide a UI.

Regarding the rest...

HTML parsing is a thing where you have a specification.... You then take this specification, put it through a meat grinder and et voila you have a parser...

Joke aside, HTML parsing is guesswork par excellence.

A lot of unusable HTML code must still work for compatibility reasons.

And I'm only talking about HTML.

Not CSS, which is yet another nightmare regarding ruleset and interpretation.

Regarding security and browsers and graphic card access...

Well... Given the clusterfuck that security is hardware-wise, I'd guess running in the browser is the least of our problems.

XD

Modern hardware is very complex, I wouldn't say I understand how complex nor the implications of security / design....

But if you read LWN / LKML / Phoronix and some other pages and combine this with CVE pages / scanners, it seems like a wonder that the world still runs and hasn't ended due to IT bugs.

-

@Condor there's a difference between "well-defined" and "decidable". Languages are "well-defined" in that their semantics is understood and mechanised. Give me a program in any language and I'll know what to do with it. That does NOT mean an algorithm can figure out what it does and hence verify/validate it. That's decidability.

The kind of validation compilers do is a lot dumber and usually just syntax driven. As an example

    // Program 1
    x = 2;

    // Program 2
    x = 0;
    for (i = 0; i < 36257388174628921; i++) {
        x += pow(2, -i);
    }

Assume infinite precision data types. These two do the same thing theoretically (evaluate to 2) but the second one will take forever and is an approximation, but I hope you see how hard it would be for an automated verification engine to reason that the two are similar somehow. No compiler does this level of analysis, they're actually quite limited. Yes you can easily validate certain classes of things (eg. uninitialised variables via liveness analysis) but those are just that - certain classes of things. Not everything.
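To make the "infinite precision" assumption concrete, here is a small sketch using Python's exact rationals. The function names and the tiny loop bounds are assumptions chosen for illustration (the bound in the example above would never finish). It shows that for any finite number of iterations the second program stays strictly below 2, with the gap halving at each step.

```python
# Sketch of the two programs with exact arithmetic (no floating point).
# program_1/program_2 and the small values of n are illustrative assumptions.
from fractions import Fraction


def program_1():
    return Fraction(2)


def program_2(n):
    """Sum 2**-i for i in range(n) using exact rational arithmetic."""
    x = Fraction(0)
    for i in range(n):
        x += Fraction(1, 2**i)
    return x


for n in (1, 4, 16):
    gap = program_1() - program_2(n)
    print(n, gap)  # prints: 1 1, then 4 1/8, then 16 1/32768
```

Seeing that the two agree "in the limit" takes a mathematical argument about the series, which is exactly the kind of reasoning a syntax-driven check never attempts.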

Formal validation without expert help for every single program that anyone doing web dev writes is basically not going to happen. Also, this has nothing to do with NP hardness. NP-complete problems are still solvable given enough time; they're just not known to be solvable in polynomial time (i.e. will be slow). The halting problem is even harder than that - it's impossible to solve for a general algorithm.

-
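As a hedged illustration of "solvable, just slow": subset sum is a classic NP-complete problem, and the brute-force search below always terminates with a definite answer, it simply may have to look at all 2**n subsets. The function name and the sample numbers are made up for this sketch. No comparable exhaustive loop exists for the halting problem, because there is no finite set of candidates to enumerate.

```python
# Brute-force subset sum: always terminates, but takes exponential time
# in the worst case. Function name and inputs are illustrative assumptions.
from itertools import combinations


def subset_sum(numbers, target):
    """Return a tuple of numbers summing to target, or None if none exists."""
    for size in range(len(numbers) + 1):
        for combo in combinations(numbers, size):
            if sum(combo) == target:
                return combo
    return None


print(subset_sum([3, 34, 4, 12, 5, 2], 9))   # (4, 5)
print(subset_sum([3, 34, 4, 12, 5, 2], 30))  # None
```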

Condor 31546 5y

@IntrusionCM Now that you mention it yes.. in Android you can change it in the developer options, but whatever is set there is what browsers will use. In Apple devices it must be Safari's engine too, and I don't think it's modifiable. In Linux too it's mostly webkit2gtk in pretty much every browser I looked at. It's also what the browser I made for shits and giggles uses. It's just the engine and I didn't write a UI on top of it, but it works. Single-tabbed, and it just takes a URL as an argument. The engine has done nearly all the hard work. At that point I think anyone and their cat can write a browser. But the work that these engines do, having to deal with the shitfest that is the modern web. Even with a gigantic resource footprint, they do it somehow.

-
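For a sense of how little glue a "just the engine, one tab, URL as an argument" browser needs, here is a minimal sketch. It is not the code from the repository mentioned in this thread; it assumes PyGObject with GTK 3 and WebKit2GTK 4.0 installed (some distros ship 4.1 instead), and `normalize_url` is a hypothetical helper added only for illustration.

```python
# Minimal single-tab browser sketch on top of WebKit2GTK (assumed available).
# Not the author's implementation; names and versions are assumptions.
import sys


def normalize_url(arg):
    """Prepend https:// when the argument has no scheme (illustrative choice)."""
    return arg if "://" in arg else "https://" + arg


def main(argv):
    # Imported lazily so the helper above works without GTK installed.
    import gi
    gi.require_version("Gtk", "3.0")
    gi.require_version("WebKit2", "4.0")  # assumption: may be 4.1 on some distros
    from gi.repository import Gtk, WebKit2

    window = Gtk.Window(title="browser")
    window.connect("destroy", Gtk.main_quit)
    view = WebKit2.WebView()  # the engine does the real work
    view.load_uri(normalize_url(argv[1]))
    window.add(view)
    window.show_all()
    Gtk.main()


if __name__ == "__main__" and len(sys.argv) > 1:
    main(sys.argv)
```

Run as a script with a URL as the only argument. Everything hard (parsing, layout, JS, TLS) lives inside the `WebView` object, which is the point being made above.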

Condor 31546 5y

@RememberMe I see what you're trying to get to. There are many ways to skin a cat, and there's a number of ways to do the same thing. Interpreters and compilers do not check for that, and should not. If the code is syntactically valid, that's all they should care about. Whatever the program is doing, don't even bother.
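A small sketch of what "syntactically valid is all they check" looks like in practice, using Python's own parser as the stand-in (the helper name is an assumption). A program that never terminates passes, and a harmless one with a missing colon does not; nothing about behaviour is examined.

```python
# Syntax-only validation: the parser accepts any well-formed program,
# including one that loops forever. Helper name is an illustrative assumption.
import ast


def is_syntactically_valid(source):
    try:
        ast.parse(source)
        return True
    except SyntaxError:
        return False


print(is_syntactically_valid("while True:\n    pass\n"))  # True
print(is_syntactically_valid("while True\n    pass\n"))   # False
```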

This is not a problem that's unique to web browsers either. I have run literal malware in VMs as part of my security research. Android phones take an elegant approach with separate users for each app, and groups for permissions. iPhones run everything as mobile but each app has its own sqlite database for data. And it works.

You may think, well that's the thing that a separate process for each tab does in browsers, confining it. But then for a dozen to a hundred tabs it'd better be efficient. And I'd like to raise the issue.. why can e.g. Facebook and Google track me with their JS across other sites, when browser vendors care so much about my security?

-

Condor 31546 5y

As far as engine efficiency goes, I have loaded my site in my own browser (https://git.ghnou.su/ghnou/browser). As you so nicely put it in my other post about that, I made a browser by using a browser. So yeah, might as well be a full browser then, not just its engine with the bare minimum of extras (which it actually is). It consumes 68MB of memory. My site (https://ghnou.su) is ridiculously efficient, no problems there. It loads instantly and doesn't need a fancy CDN for that. Yet it's still 68MB for around 10kB of site data. And I have entire containerized systems running production code (e.g. https://git.ghnou.su/ghnou/konata) that consume 3-5MB of RAM for that, depending on whether I am logged in and loaded a shell. In the memory of a single engine process I can fit around 23 of those entire systems (68MB at roughly 3MB each). Don't tell me that gigabytes of RAM for a dozen tabs is fine, regardless of the reasons for it. And with invasive companies waltzing right over the security aspect, it's not even being solved properly.

-

@Condor 68MB in memory is pretty impressive. That's one small website. Hardly a fair comparison to a relatively small app deployment though (the bot). If you want something even smaller, my d&d discord bot that I wrote in Rust and serves a bunch of servers just sips memory. I'm not sure what that proves though. It's like comparing a bike to a truck and berating the truck for being big and having a ton of space while the bike is "small and efficient".

Barebones websites are nice if you like that look. My own website is under 50KB total and is only HTML, CSS, and SVG artwork (space taken is mostly some of the stories I put up). Loads instantly, looks great. But that kind of extremely minimal design isn't going to work for many use cases so...also not sure what the point is there.

I agree HTML/CSS/JS could have been better designed in terms of efficiency though.

Browser security is actually pretty impressive for what all they have to deal with. Yes it could be better but even privacy-focused browsers have difficulty covering everything. It's a really hard problem to solve while keeping everything else that's demanded of a browser. Also most of the tracking stuff is because of the code that's running, there's not exactly much the browser engine can do about it. That's more in the domain of e.g. uBlock Origin or Pi-hole or whatever Firefox Focus does to block trackers.

It's like fighting malware with compiler/linker technology - yes they can (and do) do a fuckton of stuff, like inserting stack canaries to detect overflows or memory range analysis or position independent code generation to stop address based attacks, but that's just one component of a multilayered defence.

-

Condor 31546 5y

@RememberMe sorry, that bot project was supposed to be in comparison with another one I forgot to mention. Long stressful day, late at night etc...

The bot it was inspired by is https://github.com/Nick80835/.... It consumes roughly 200-250MB memory average, and does roughly the same things as my bot (except a bit more modules / features, but the number of that isn't that relevant here). Don't get me wrong, it is a good bot. I looked at its code several times and it's well-written too. But nonetheless my bot is orders of magnitude more efficient. And compared to browsers.. those things are easily the heaviest thing on my entire systems. When it comes to that, what I would blame is maybe things like isEven (actual JS module) versus a modulo operation. Does the same thing but one is for retards while the other is not. But this is a bigger problem than that. The modern web is bloated... There's many places we can look for accusations and defenses. I think it should just be solved.
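For reference, the modulo version of that parity check is a one-liner. This sketch is in Python rather than JS, and the function name is only illustrative.

```python
# Parity check with a plain modulo operation, no dependency required.
def is_even(n):
    return n % 2 == 0


print(is_even(4))  # True
print(is_even(7))  # False
```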

Related Rants

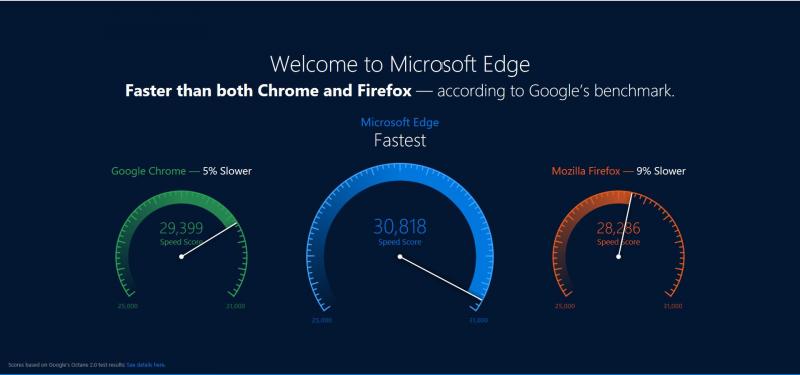

Sigh, Microsoft we need to talk...

Sigh, Microsoft we need to talk... This is hilarious 😂🤣🤣

This is hilarious 😂🤣🤣 "Close all the browser tabs" is the new "reboot the machine".

"Close all the browser tabs" is the new "reboot the machine".

I just installed Opera Mini on my PSP. That isn't very exciting on its own, although I am stoked that my website does in fact render on a device from 2009. With the helpful guidance of a laptop from 2004 that's doing the hotspot duties for this thing.

No, what really got me stoked is that Opera still supports these old platforms, and how small they managed to make it. The .jar file for Opera Mini 4.5 is ~800kB large. There's a .jad file as well but it's negligible in size and seems to be a signature of sorts.

Let that sink in for a moment. This entire web browser is 800kB. Firefox meanwhile consistently consumes 800 MEGABYTES.. in MEMORY. So then, I went to think for a moment, how on earth did they manage to cram an entire functioning web browser in 800kB? Hell, what makes up a web browser anyway?

The answer I arrived at is as follows. You need an engine to render the web page you receive. You need a UI to make the browser look nice. And finally you need a certificate store to know which TLS certificates to trust. And while probably difficult to make, I think it should be possible to do in 800k. Seriously, think about it. How would you go about *making* a web browser? Because I've already done that in the past.

Earlier I heard that you need graphics, audio, wasm, yada yada backends too.. no. Give your head a shake. Graphics are the responsibility of the graphics driver. A web browser shouldn't dabble with those at all. Audio, you connect to PulseAudio (in Linux at least) and you're done. Hell I don't even care about ALSA or OSS here. You just connect to the stuff that does that job for you. And WebAssembly.. God I could rant about that shit all day. How about making it a native application? Not like actual Assembly is used for BIOS and low-level drivers. And that we already have a better language for the more portable stuff called C.

Seriously, think about it. Opera - a reputable browser vendor - managed to do it in 800kB on a 12 year old device. Don't go full wank on your framework shit in the comments. And don't you fucking dare to tell me that there's more to it. They did it for crying out loud. Now you take a look at your shitpile of JS code and refactor that shit already. Thank you.

rant

minimalism

browsers

web devs