Comments

stacked (2628, 7y): I should publish a paper titled "Generating Random Numbers Using Failed Machine Learning Attempts".

Abstract: "The random number generators presented here are slow and predictable. Their implementation details are long and complicated. They should not be used by anyone."

OP, let's work together on an article or blog post that we can call "What Happens When Web Devs Refuse to Learn Math for ML".

I seriously believe that the biggest issue is people refusing to learn the essential mathematical methods that would let them see how badly they are failing. Sadly, people just eat that shit up.

stacked (2628, 7y): @AleCx04 the "web devs" in my case are senior software engineers, and some of them are currently taking ML courses (those probably cause more damage than anything else).

stacked (2628, 7y): @NoMad lol, I wouldn't be surprised if giving only 1s would make 100% accuracy.

I've seen people testing only with positive cases, completely forgetting about the negative ones. And while this may seem hilarious, this actually happens very frequently here where I work because we store only the positives (which makes sense for what our service does, but doesn't make sense if you want to do some data science: for that you need to use the raw values from the source).
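To make the positives-only trap concrete, here is a minimal sketch. The `always_positive` "model" and the toy datasets are made up for illustration and are not anything from the actual service; the point is only that a test set without negatives cannot tell a real model from one that always answers 1.

```python
def always_positive(_sample):
    """A useless 'model' that predicts the positive class for every input."""
    return 1


def accuracy(model, dataset):
    """Fraction of (sample, label) pairs the model labels correctly."""
    correct = sum(model(sample) == label for sample, label in dataset)
    return correct / len(dataset)


# Hypothetical toy data: the sample values are placeholders and are ignored.
positives_only = [(i, 1) for i in range(50)]
with_negatives = positives_only + [(i, 0) for i in range(50)]

print(accuracy(always_positive, positives_only))  # 1.0
print(accuracy(always_positive, with_negatives))  # 0.5
```

Once the negatives from the raw source are back in the test set, the "perfect" model drops straight to coin-flip territory.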

Related Rants

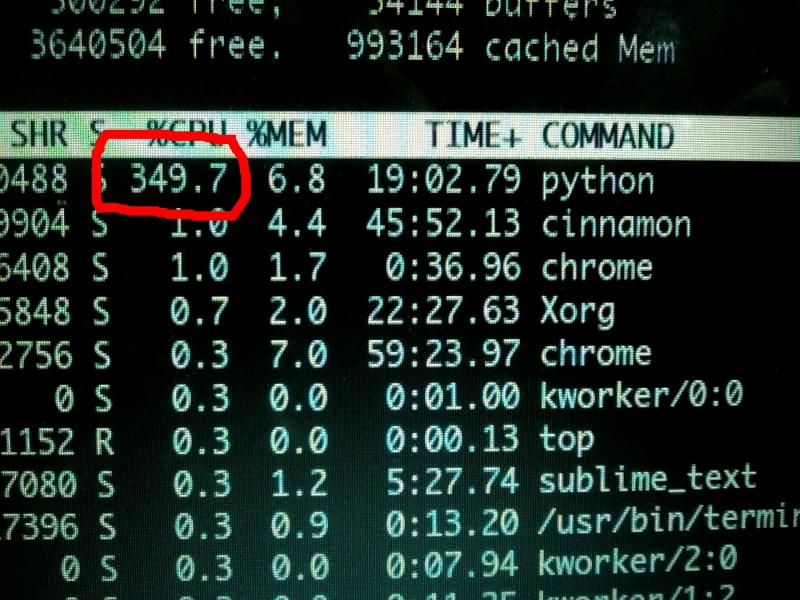

Machine Learning messed up!

Machine Learning messed up! When your CPU is motivated and gives more than his 100%

When your CPU is motivated and gives more than his 100% What is machine learning?

What is machine learning?

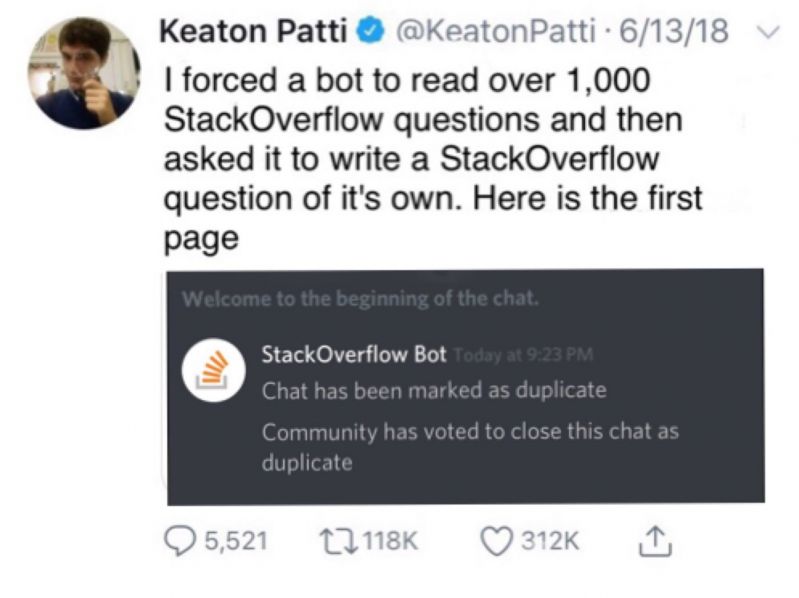

Tired of hearing "our ML model has 51% accuracy! That's a big win!"

No, asshole, what you just built is a fucking random number generator, and a crappy one at that.

In binary classification you effectively cannot do worse than 50%. If you had a binary classification model that was 10% accurate, that would be a win: you would just need to invert the output of the model, and you'd instantly get 90% accuracy.
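A quick sketch of the inversion trick, using a made-up set of ten labels and a hypothetical model that gets exactly one of them right (the data is invented purely for the example):

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the labels."""
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def invert(predictions):
    """Flip every binary prediction: 0 becomes 1 and 1 becomes 0."""
    return [1 - p for p in predictions]


# Hypothetical toy data: only the first prediction matches its label.
labels = [0, 1, 0, 1, 0, 1, 0, 1, 0, 1]
bad_predictions = [0, 0, 1, 0, 1, 0, 1, 0, 1, 0]

original_accuracy = accuracy(bad_predictions, labels)
inverted_accuracy = accuracy(invert(bad_predictions), labels)

print(original_accuracy)  # 0.1
print(inverted_accuracy)  # 0.9
```

In general, a binary model with accuracy `a` becomes one with accuracy `1 - a` when inverted, which is why 50% is the real floor.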

50% accuracy is what you get by flipping coins. And you can achieve that with 1 line of code.
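For the record, a sketch of that coin-flip "model". The one line that matters is the `return` inside `coin_flip`; everything else is scaffolding to measure it against a hypothetical balanced label set, with a fixed seed so the run is repeatable.

```python
import random


def coin_flip(rng):
    """The whole 'model': ignore the input and guess 0 or 1."""
    return rng.randint(0, 1)


def coin_flip_accuracy(n_samples=10_000, seed=0):
    """Accuracy of coin flipping on balanced labels (half 0s, half 1s)."""
    rng = random.Random(seed)
    labels = [i % 2 for i in range(n_samples)]
    hits = sum(coin_flip(rng) == y for y in labels)
    return hits / n_samples


# Lands close to 0.5; the exact value depends on the seed.
print(coin_flip_accuracy())
```

A reported 51% only means something if it is clearly outside the spread you get from reruns like this one on a test set of the same size.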

rant

machine learning