Join devRant

Do all the things like

++ or -- rants, post your own rants, comment on others' rants and build your customized dev avatar

Sign Up

Pipeless API

From the creators of devRant, Pipeless lets you power real-time personalized recommendations and activity feeds using a simple API

Learn More

Related Rants

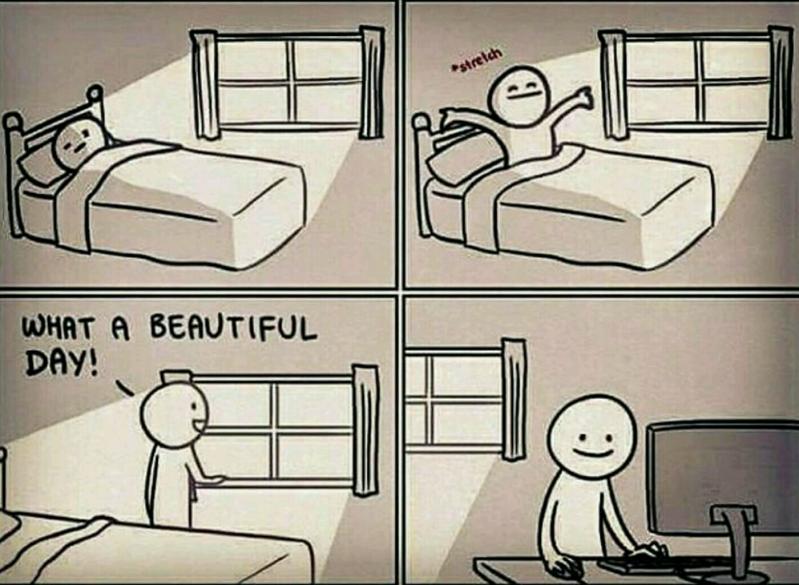

Is it just me or are you like this too? 😆 #devLife

Is it just me or are you like this too? 😆 #devLife

So, what am I trying to achieve?

I have a video upload service in Spring Boot that generates an S3 presigned URL and lets the client upload a video straight to the S3 bucket. As soon as the upload succeeds, I want to push it onto a RabbitMQ queue as a job for my consumer to process further.

Here is my approach!

On the client side, once I get the "video uploaded" status, I trigger a webhook on the backend that looks up the uploaded video in S3 and pushes it onto the queue for further processing. Of course, I'm considering retry logic here. The problem is that if the webhook fails for some reason, I end up with a dead video in my S3 bucket that will never be processed.
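A rough sketch of what that webhook step could look like, in plain Java so it runs without Spring. Everything named here is hypothetical: `JobQueue` stands in for whatever publishes to RabbitMQ, the in-memory map stands in for the status records, and `maxAttempts` is just one simple way to do the retry. The one design choice worth noting is that the handler claims the video with an atomic "pending" to "processing" switch before publishing, so a duplicate webhook call does not queue the same video twice.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical stand-in for the component that publishes to RabbitMQ.
interface JobQueue {
    void publish(String videoKey);
}

class UploadWebhookHandler {
    enum Status { PENDING, PROCESSING }

    // In-memory stand-in for the status records (assumption: one record per S3 key).
    private final Map<String, Status> statusByKey = new ConcurrentHashMap<>();
    private final JobQueue queue;
    private final int maxAttempts;

    UploadWebhookHandler(JobQueue queue, int maxAttempts) {
        this.queue = queue;
        this.maxAttempts = maxAttempts;
    }

    // Called at the moment the presigned URL is handed to the client.
    void registerUpload(String videoKey) {
        statusByKey.put(videoKey, Status.PENDING);
    }

    Status statusOf(String videoKey) {
        return statusByKey.get(videoKey);
    }

    // Called by the webhook when the client reports a finished upload.
    // Returns true only if this call actually queued a job.
    boolean onUploadComplete(String videoKey) {
        // Atomic PENDING -> PROCESSING; fails for unknown or already-claimed keys.
        if (!statusByKey.replace(videoKey, Status.PENDING, Status.PROCESSING)) {
            return false;
        }
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                queue.publish(videoKey);
                return true;
            } catch (RuntimeException e) {
                // Publish failed; loop around and try again.
            }
        }
        // Every attempt failed: put the record back to PENDING so a later
        // retry (or a background check) can pick the video up again.
        statusByKey.put(videoKey, Status.PENDING);
        return false;
    }
}
```

A real handler would probably wait between attempts (backoff) instead of retrying immediately; that is left out to keep the sketch short.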

How did I handle this case?

I'm using a polling approach. When I create the presigned URL, I also create a record in a DB (preferably Redis) labeled "pending". If the webhook runs successfully, it sets the target video to "processing". In case the webhook fails, a scheduler runs every 5 minutes and checks Redis for any "pending" records older than X minutes (how long to wait before considering the webhook failed). If the object exists in S3 but its record is still "pending", the scheduler publishes the job to RabbitMQ.
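The polling check described above can be sketched roughly like this, again in plain Java with stand-ins. The names are all hypothetical: `ObjectStore.exists` plays the role of an S3 existence check, `JobPublisher` plays the role of the RabbitMQ publisher, the map replaces the Redis records, and `gracePeriod` is the "X minutes" from the description. In Spring Boot, `sweep` would presumably be called from a method annotated with `@Scheduled(fixedDelay = 300_000)`; here the current time is passed in so the logic is easy to test.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical stand-in for an S3 "does this object exist" check.
interface ObjectStore {
    boolean exists(String key);
}

// Hypothetical stand-in for the RabbitMQ publisher.
interface JobPublisher {
    void publish(String key);
}

class PendingUploadSweeper {
    enum Status { PENDING, PROCESSING }

    static final class UploadRecord {
        final Instant createdAt;
        volatile Status status = Status.PENDING;
        UploadRecord(Instant createdAt) { this.createdAt = createdAt; }
    }

    // In-memory stand-in for the Redis records, keyed by S3 object key.
    private final Map<String, UploadRecord> records = new ConcurrentHashMap<>();
    private final ObjectStore objectStore;
    private final JobPublisher publisher;
    private final Duration gracePeriod; // the "X minutes" before the webhook counts as failed

    PendingUploadSweeper(ObjectStore objectStore, JobPublisher publisher, Duration gracePeriod) {
        this.objectStore = objectStore;
        this.publisher = publisher;
        this.gracePeriod = gracePeriod;
    }

    // Called when the presigned URL is created.
    void registerUpload(String key, Instant createdAt) {
        records.put(key, new UploadRecord(createdAt));
    }

    // What a successful webhook would do.
    void markProcessing(String key) {
        UploadRecord r = records.get(key);
        if (r != null) r.status = Status.PROCESSING;
    }

    Status statusOf(String key) {
        UploadRecord r = records.get(key);
        return r == null ? null : r.status;
    }

    // One scheduler run: requeue stale PENDING uploads that really exist in storage.
    List<String> sweep(Instant now) {
        List<String> requeued = new ArrayList<>();
        Instant cutoff = now.minus(gracePeriod);
        for (Map.Entry<String, UploadRecord> e : records.entrySet()) {
            UploadRecord r = e.getValue();
            boolean stale = r.status == Status.PENDING && r.createdAt.isBefore(cutoff);
            if (!stale || !objectStore.exists(e.getKey())) continue;
            try {
                publisher.publish(e.getKey());
                r.status = Status.PROCESSING; // only after a successful publish
                requeued.add(e.getKey());
            } catch (RuntimeException ex) {
                // Leave the record PENDING; the next run will try again.
            }
        }
        return requeued;
    }
}
```

Records that are old but have no object in storage are left alone here (the client may never have uploaded); cleaning those up, for example with an expiry on the record, would be a separate decision.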

I'm just a noob engineer trying to learn new things. ^w^

Sorry for my bad grammar. I hope I've successfully conveyed my thought process!

question

webhook

backend

transcoding

video-processing

s3